Active Projects

Here are some of the projects I am currently working on. Some are side projects, some are client projects, and some are just for fun. I like to build things, and I like to share what I learn along the way. If you have any questions about any of these projects, feel free to reach out.

📦 Flow Export

Export Salesforce Flow to Miro to collaborate with your team. Read more about the launch of Flow Export on the Salesforce AppExchange.

🤖 Make Storytime

Personalized children's stories generated by AI. This is an app I am building with my kids.

📠 Fax Online

Yeah, I know. But there is a long tail for everything, and believe it or not, there was an underserved market for people who need to send a fax online. Some people (like me!) just need to send a one-time fax.

Read more about this micro SaaS project in a blog post about building an online fax service.

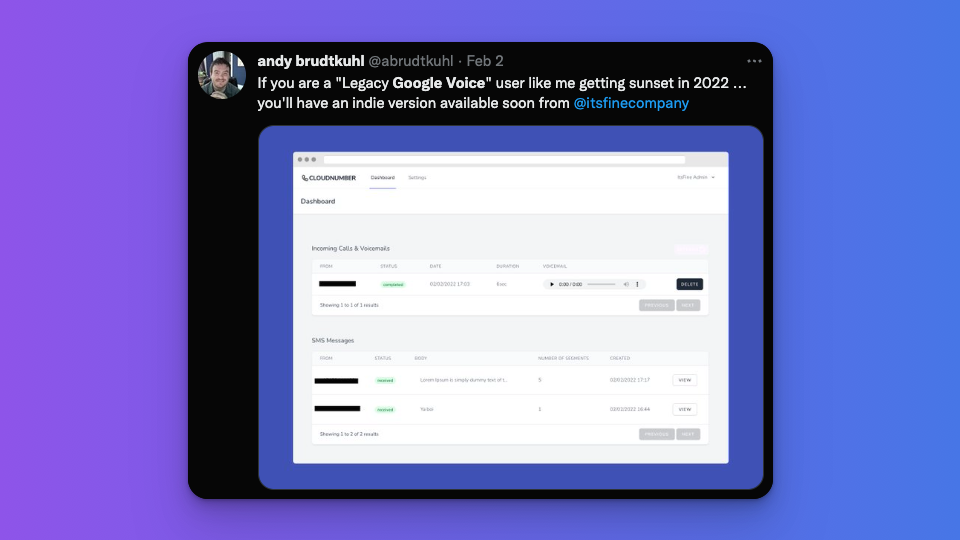

📱 Cloud Number

Cloud Number is a virtual phone app that helps you protect your privacy online. Use it to receive SMS online and keep your phone number private. It's also a great service for freelancers and entrepreneurs who want a separate business number for SMS and voicemail.

🥑 Free URL Indexer

Free URL Indexer helps you get your backlinks indexed by Google faster. It's a simple tool I built for my own SEO efforts, and I decided to share it with the world. It's free, and I don't even ask for your email address. Just paste in your URL and click the button.

👉 See all projects

Blog

November 8, 2022

Building an app for virtual phone numbers

A couple of years ago, we set out, half jokingly, to build a service for sending faxes online as a way to launch a micro SaaS and play with the Stripe API and the Twilio API. It has since seen a number of record sales days, and we've sent thousands of faxes. I've...

December 20, 2022

Migrating a WordPress website

Migrating a WordPress website to a new web host can be a straightforward process, but it's important to plan and prepare in advance to ensure a smooth transition. Here are some steps you can follow...

February 10, 2021

Building An Online Fax Service

A goal I had in 2020 was to build and launch a micro SaaS product. What is a micro SaaS? Well, it's essentially a software-as-a-service product, but smaller in scope. A micro SaaS product is often...

January 23, 2019

Weekly Agtech Email Newsletter

Today I launched a new project for 2019: an Agtech-focused email newsletter. The goal is to give readers the best information from the past week. I read a lot, rip out all the PR, add some...

January 19, 2019

How I Migrated WordPress to a Static Website

My first project of 2019 was to migrate this 10+-year-old WordPress website to a static website. For various reasons, the stack I chose is as follows... Static Website Generator: Jigsaw CSS...

November 15, 2018

Blockchain 101

On November 15, 2018, I spoke at the Iowa IT Symposium about the basics of blockchain and how it will be used in our global food supply chain. Learn the basics of blockchain technology along...

October 31, 2017

Full Stack Marketing

Full Stack Marketing is the concept of building analytics into your tech stack. Why would you do that? Fundamentally, we want to answer the question, “Do you know what your users are doing?” And...
