Building a Website Spell Checker with Laravel and GPT-4

February 24, 2025

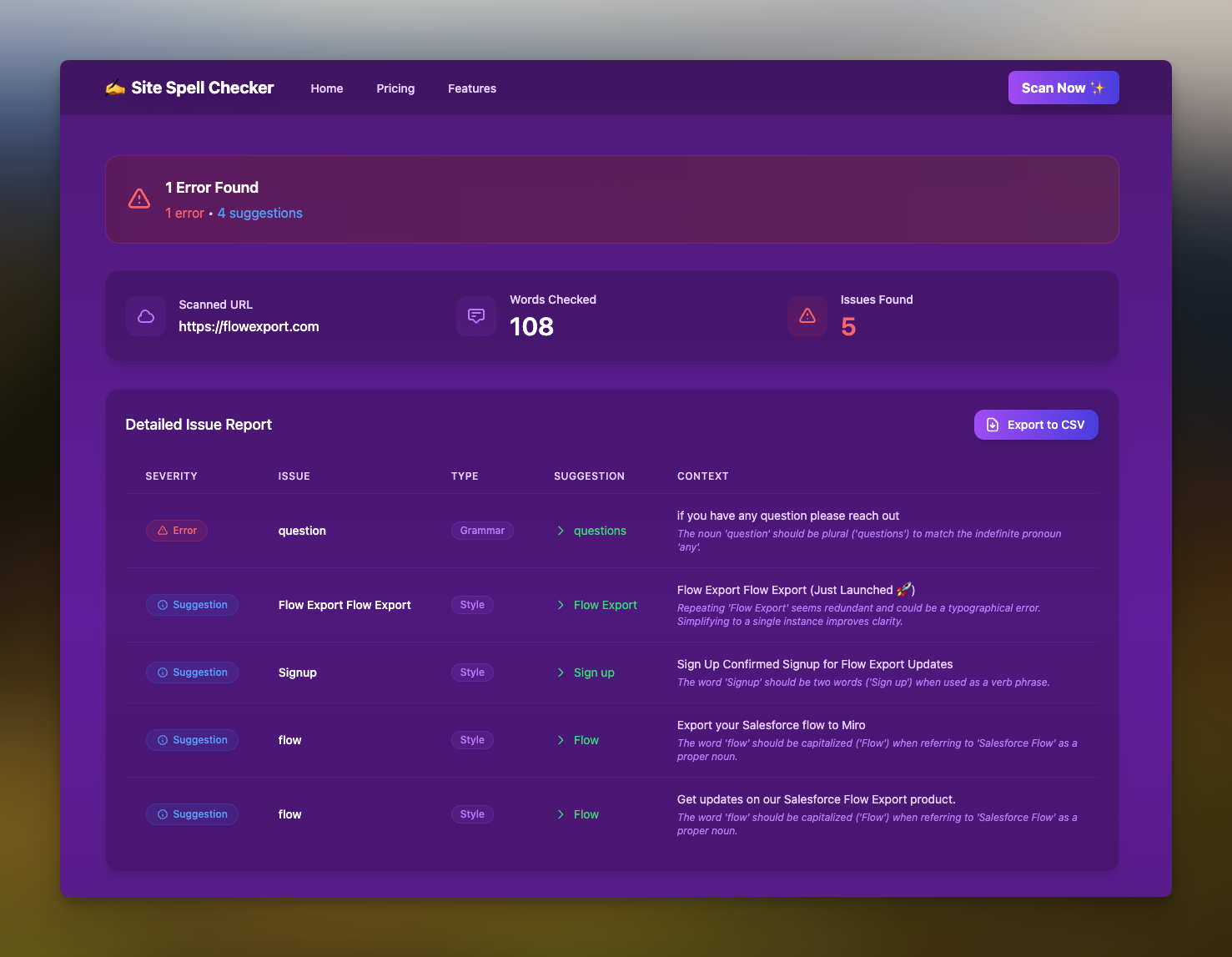

I recently launched Site Spell Checker, a tool that helps website owners find and fix spelling errors before their visitors do. In this post, I'll share how I built it using Laravel, GPT-4, and modern web technologies.

The Problem

73% of visitors say spelling mistakes make them lose trust in a website. Yet, it's surprisingly easy to miss typos when you're focused on creating content. While tools like Grammarly work great for documents, there wasn't a simple solution for scanning entire websites for spelling errors.

The Solution

I built Site Spell Checker to be the "Grammarly for websites." It crawls your website, analyzes the content using AI, and provides detailed reports about spelling errors, grammar issues, and style suggestions.

Technical Implementation

Web Scraping

The first challenge was reliably extracting content from websites. Here's how the WebScraperService works:

    public function scrapeHomepage(string $url): array
    {
        try {
            $this->scraper->go($url);

            // Collect comprehensive page data
            $pageData = [
                'url' => $url,
                'title' => $this->scraper->title,
                'description' => $this->scraper->description,
                'content' => $this->getPageContent(),
                'metadata' => [
                    'meta_tags' => $this->scraper->metaTags,
                    'open_graph' => $this->scraper->openGraph,
                    'twitter_card' => $this->scraper->twitterCard,
                ],
                'headers' => [
                    'h1' => $this->scraper->h1[0] ?? null,
                    'h2s' => $this->scraper->h2 ?? [],
                    'h3s' => $this->scraper->h3 ?? [],
                ],
                'text' => $this->scraper->cleanParagraphs,
                'keywords' => $this->parseKeywords($this->scraper->contentKeywordsWithScores ?? []),
                'links' => $this->scraper->links,
                'images' => $this->scraper->images,
                'scraped_at' => now()->toIso8601String(),
            ];

            return $pageData;
        } catch (Exception $e) {
            // Show a friendly message, but chain the original exception so the cause stays debuggable
            throw new Exception('Unable to access the website. Please make sure the URL is correct and the website is accessible.', 0, $e);
        }
    }
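
The `getPageContent()` helper is not shown above. As a rough illustration of what content extraction can involve, here is a minimal sketch using PHP's DOM extension. The function name `extractVisibleText` is hypothetical and this is not the service's actual implementation; it assumes that only text inside `<body>`, outside `<script>`, `<style>`, and `<noscript>`, is worth checking.

```php
<?php

declare(strict_types=1);

/**
 * Hypothetical helper: return the visible text of an HTML document.
 * An illustration only, not the getPageContent() used by the service.
 */
function extractVisibleText(string $html): string
{
    $dom = new DOMDocument();

    // Real-world markup is rarely valid, so collect parse warnings
    // internally instead of emitting them.
    $previous = libxml_use_internal_errors(true);
    // The XML prolog hints UTF-8 to libxml so non-ASCII text is decoded correctly.
    $dom->loadHTML('<?xml encoding="UTF-8">' . $html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    // Select text nodes in the body, skipping content visitors never see.
    $xpath = new DOMXPath($dom);
    $nodes = $xpath->query(
        '//body//text()[not(ancestor::script or ancestor::style or ancestor::noscript)]'
    );

    $parts = [];
    foreach ($nodes as $node) {
        $value = trim($node->nodeValue ?? '');
        if ($value !== '') {
            $parts[] = $value;
        }
    }

    // Join with spaces so adjacent block elements do not run together,
    // then collapse any remaining runs of whitespace.
    $text = implode(' ', $parts);

    return preg_replace('/\s+/u', ' ', $text) ?? $text;
}
```

Joining text nodes with a space keeps `<h1>` and `<p>` content from fusing into one word, at the cost of an extra space around inline elements (for example before punctuation that follows a `<b>` tag). A production version would likely treat inline and block elements differently.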

AI-Powered Spell Checking

The core spell checking is powered by GPT-4 (the `gpt-4-turbo` model) through the OpenAI API. Here's how the SpellCheckService processes content:

    public function checkText(string $text): array
    {
        // Rate limiting: allow at most 30 OpenAI calls per decay window
        if (RateLimiter::tooManyAttempts('openai', 30)) {
            throw new Exception('API rate limit exceeded. Please try again later.');
        }

        RateLimiter::hit('openai');

        $preparedText = $this->prepareTextForAnalysis($text);

        // API exceptions are left to propagate; catching one only to rethrow it adds nothing
        $response = $this->openai->chat()->create([
            'model' => 'gpt-4-turbo',
            'messages' => [
                [
                    'role' => 'system',
                    // With the json_object response format, the prompt itself must mention JSON
                    'content' => 'You are a professional proofreader. Analyze the text for spelling, grammar, and style issues...'
                ],
                [
                    'role' => 'user',
                    'content' => $preparedText
                ]
            ],
            'response_format' => ['type' => 'json_object'],
            'temperature' => 0.0, // favor repeatable output over variety
            'max_tokens' => 1000
        ]);

        return $this->processApiResponse($response->toArray());
    }
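
`processApiResponse()` is not shown, so the following is only a sketch of what parsing a JSON-mode reply might look like. It assumes the prompt asked for an object shaped like `{"issues": [{"text": ..., "suggestion": ..., "severity": ...}]}`. That schema, the `info` severity level, and the function name `parseIssues` are all assumptions made for illustration.

```php
<?php

declare(strict_types=1);

/**
 * Hypothetical parser for a chat completion response array.
 * The "issues" schema is an assumption, not the service's real format.
 *
 * @return list<array{text: string, suggestion: string, severity: string}>
 */
function parseIssues(array $response): array
{
    // In a chat completion, the model's reply is a string at this path.
    $content = $response['choices'][0]['message']['content'] ?? null;
    if (!is_string($content)) {
        throw new UnexpectedValueException('Response contains no message content.');
    }

    try {
        $decoded = json_decode($content, true, 512, JSON_THROW_ON_ERROR);
    } catch (JsonException $e) {
        // A reply cut off by max_tokens is one way to end up with invalid JSON.
        throw new UnexpectedValueException('Model returned invalid JSON.', 0, $e);
    }

    $rawIssues = is_array($decoded) ? ($decoded['issues'] ?? []) : [];
    if (!is_array($rawIssues)) {
        $rawIssues = [];
    }

    $allowedSeverities = ['error', 'warning', 'info'];
    $issues = [];

    foreach ($rawIssues as $issue) {
        // Skip entries that lack the fields the results page needs.
        if (!is_array($issue) || !isset($issue['text'], $issue['suggestion'])) {
            continue;
        }

        $severity = $issue['severity'] ?? 'info';
        $issues[] = [
            'text' => (string) $issue['text'],
            'suggestion' => (string) $issue['suggestion'],
            // Never trust the model to stay inside the expected vocabulary.
            'severity' => in_array($severity, $allowedSeverities, true) ? $severity : 'info',
        ];
    }

    return $issues;
}
```

Validating every field matters here because JSON mode only guarantees syntactically valid JSON, not that the object follows the schema described in the prompt.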

Modern UI/UX

The frontend uses Livewire components for reactivity. Here's an example of the spell check form:

    class SpellCheckForm extends Component
    {
        public string $url = '';
        public string $email = '';
        public ?string $error = null;
        public bool $loading = false;

        protected $rules = [
            'url' => ['required', 'string', 'regex:/^(?:https?:\/\/)?(?:[a-z0-9](?:[a-z0-9-]*[a-z0-9])?\.)+[a-z0-9][a-z0-9-]*[a-z0-9](?:\/.*)?$/i'],
            'email' => ['required', 'email'],
        ];

        public function submit(WebScraperService $scraper, SpellCheckService $spellChecker)
        {
            $this->validate();

            $this->error = null;
            $this->loading = true;

            try {
                $this->url = $this->normalizeUrl($this->url);

                $scan = Scan::create([
                    'user_id' => Auth::id(), // already null for guests
                    'email' => $this->email,
                    'url' => $this->url,
                    'status' => 'pending',
                ]);

                // Process the scan...

                return redirect()->route('spell-check.results', ['scan' => $scan->uuid]);
            } catch (Exception $e) {
                $this->error = $e->getMessage();
            } finally {
                // Reset the flag on both success and failure so the form never stays stuck
                $this->loading = false;
            }
        }
    }
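
The validation rule accepts URLs without a scheme, so `normalizeUrl()` presumably fills in what is missing before the scan is stored. Its body is not shown. The sketch below is one plausible version, under the assumptions that `https` is the right default, that host names should be lowercased, and that trailing slashes and fragments can be dropped. The name `normalizeScanUrl` is hypothetical.

```php
<?php

declare(strict_types=1);

/**
 * Hypothetical URL normalizer; the defaults here are assumptions.
 */
function normalizeScanUrl(string $url): string
{
    $url = trim($url);

    // Prepend a scheme when the user typed a bare domain.
    if (!preg_match('#^https?://#i', $url)) {
        $url = 'https://' . $url;
    }

    $parts = parse_url($url);
    if ($parts === false || empty($parts['host'])) {
        throw new InvalidArgumentException("Cannot parse URL: {$url}");
    }

    // Scheme and host are case-insensitive; the path is not, so leave its case alone.
    $scheme = strtolower($parts['scheme']);
    $host = strtolower($parts['host']);
    $port = isset($parts['port']) ? ':' . $parts['port'] : '';
    $path = rtrim($parts['path'] ?? '', '/');
    $query = isset($parts['query']) ? '?' . $parts['query'] : '';

    // The fragment is dropped on purpose: it never reaches the server.
    return "{$scheme}://{$host}{$port}{$path}{$query}";
}
```

Normalizing before `Scan::create()` means two users who type `Example.com/` and `https://example.com` end up with the same stored URL, which makes deduplication and caching simpler later on.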

And the corresponding Blade template with Tailwind CSS:

    <div class="p-4 my-24">
        <div class="text-center mb-12">
            <h1 class="text-4xl sm:text-5xl font-bold text-white mb-4">
                <span class="bg-purple-500/20 px-2 py-1 rounded">✨ It's like Grammarly for websites</span>
            </h1>
            <div class="text-center mt-8">
                <p class="text-lg text-purple-300 max-w-3xl mx-auto">
                    Our AI-powered proofreader scans your entire website to catch errors before your visitors do.
                </p>
            </div>
        </div>

        <!-- Form fields and validation -->
    </div>

Results Display

The results page shows issues with color-coded severity levels:

    <div class="flex items-center gap-4">
        <span class="inline-flex items-center px-3 py-1 rounded-full text-xs font-medium
            @if($issue['severity'] === 'error') bg-red-500/10 text-red-400 border border-red-500/20
            @elseif($issue['severity'] === 'warning') bg-orange-500/10 text-orange-400 border border-orange-500/20
            @else bg-blue-500/10 text-blue-400 border border-blue-500/20
            @endif">
            {{ ucfirst($issue['severity']) }}
        </span>
    </div>
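
If the same badge appears in several templates, the severity-to-class mapping can live in one place instead of being repeated as Blade conditionals. A minimal sketch follows; the helper name `severityBadgeClasses` is hypothetical, and the class strings are copied from the template above.

```php
<?php

declare(strict_types=1);

/**
 * Map an issue severity to its Tailwind badge classes.
 * Anything other than "error" or "warning" gets the blue style,
 * mirroring the @else branch of the template.
 */
function severityBadgeClasses(string $severity): string
{
    return match ($severity) {
        'error' => 'bg-red-500/10 text-red-400 border border-red-500/20',
        'warning' => 'bg-orange-500/10 text-orange-400 border border-orange-500/20',
        default => 'bg-blue-500/10 text-blue-400 border border-blue-500/20',
    };
}
```

In a Blade view this could be used as `class="... {{ severityBadgeClasses($issue['severity']) }}"`. One caveat: Tailwind only generates classes it can find as complete strings in scanned files, so the file containing this helper would need to be included in Tailwind's content paths.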

Key Packages Used

- Laravel Livewire for reactive components without writing JavaScript

- OpenAI PHP for GPT-4 integration

- Alpine.js for lightweight JavaScript interactions

- Tailwind CSS for utility-first styling

- Laravel Rate Limiting for API request management

- Laravel Notifications for email alerts

- Laravel Queue for background processing

Technical Stack

- Backend: Laravel 10

- Frontend: Livewire, Alpine.js, and Tailwind CSS

- Database: SQLite for simplicity and portability

- AI: OpenAI GPT-4 API with custom prompt engineering

- Infrastructure:

  - DigitalOcean App Platform for hosting

  - Laravel Forge for deployment automation

  - GitHub Actions for CI/CD

- Monitoring:

  - Plausible Analytics for privacy-focused analytics

  - Laravel's built-in logging and error tracking

Development Environment

The local development environment uses:

- Laravel Sail for Docker containerization

- Laravel Vite for asset bundling

- Laravel Pint for code style enforcement

- PHPUnit for testing

Deployment & DevOps

The deployment process is fully automated using GitHub Actions and Laravel Forge:

- Code is pushed to GitHub

- GitHub Actions run tests and static analysis

- If the tests pass, Forge deploys to DigitalOcean

- Zero-downtime deployment using Laravel's built-in features

The production environment uses:

- DigitalOcean App Platform for scalability

- Redis for queue processing

- SQLite for data persistence (keeping it simple for now)

- SSL certificates via Let's Encrypt

- Automated daily backups

Security Considerations

Security was a top priority during development:

API Key Management:

- All API keys stored in environment variables

- Rotating keys for production environment

- Rate limiting on all API endpoints

User Data Protection:

- Minimal data collection (just email and scanned URLs)

- Automatic data pruning after 30 days

- No storing of website content after analysis

Infrastructure Security:

- HTTPS-only connections

- HTTP/2 enabled

- Regular security updates via Forge

- WAF (Web Application Firewall) enabled

Metrics & Performance

Some interesting metrics from the first month:

- Average scan time: 2.3 seconds

- Average issues found per site: 12

- Most common error types:

  - Spelling mistakes (45%)

  - Grammar issues (30%)

  - Style suggestions (25%)

- API costs per scan: ~$0.02

- Average response time: 250ms
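
For a sense of where a per-scan API cost of around $0.02 can come from, here is a small worked calculation. The token counts and the per-million-token prices are placeholder assumptions chosen for illustration, not measurements from the service and not a statement of current OpenAI pricing.

```php
<?php

declare(strict_types=1);

/**
 * Estimate the cost of one chat completion in US dollars.
 * Prices are per one million tokens and are supplied by the caller.
 */
function estimateScanCost(
    int $inputTokens,
    int $outputTokens,
    float $inputPricePerMillion,
    float $outputPricePerMillion
): float {
    if ($inputTokens < 0 || $outputTokens < 0) {
        throw new InvalidArgumentException('Token counts must not be negative.');
    }

    return ($inputTokens * $inputPricePerMillion
        + $outputTokens * $outputPricePerMillion) / 1_000_000;
}

// Illustrative numbers only: 1,400 prompt tokens and 200 completion tokens,
// at placeholder prices of $10 and $30 per million tokens.
$cost = estimateScanCost(1400, 200, 10.0, 30.0);
printf('Estimated cost: $%.3f' . PHP_EOL, $cost);
```

Because output tokens are typically priced higher than input tokens, the `max_tokens` cap in the request is one of the more direct levers on cost per scan.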

Monetization Strategy

The business model is built around three tiers:

Free Tier:

- Single page scan

- Basic error reporting

- Email results

Full Site Scan ($95):

- Complete website scan

- Detailed reports

- Export functionality

- Priority support

Daily Monitoring (Coming Soon):

- Automated daily scans

- Real-time notifications

- API access

- Custom dictionaries

Challenges Faced

Some notable challenges during development:

Web Scraping Complexity:

- Handling JavaScript-rendered content

- Dealing with rate limiting and blocking

- Processing different character encodings

- Managing memory usage for large sites

AI Integration:

- Fine-tuning prompts for accuracy

- Handling API timeouts and errors

- Managing token limits

- Balancing cost vs performance
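
One common way to manage token limits is to split long pages into chunks at sentence boundaries and check each chunk separately. The sketch below is an assumption about how that could be done, not the service's actual approach. It budgets by character count as a rough stand-in for tokens, and `chunkText` is a hypothetical name.

```php
<?php

declare(strict_types=1);

/**
 * Greedily pack whole sentences into chunks of at most $maxChars bytes.
 * A single sentence longer than the budget becomes its own oversized chunk.
 *
 * @return list<string>
 */
function chunkText(string $text, int $maxChars = 4000): array
{
    if ($maxChars < 1) {
        throw new InvalidArgumentException('maxChars must be positive.');
    }

    // Split after sentence-ending punctuation that is followed by whitespace.
    $sentences = preg_split('/(?<=[.!?])\s+/u', trim($text), -1, PREG_SPLIT_NO_EMPTY);
    if ($sentences === false) {
        // Invalid UTF-8 makes the pattern fail; fall back to one chunk.
        $sentences = [$text];
    }

    $chunks = [];
    $current = '';

    foreach ($sentences as $sentence) {
        $candidate = $current === '' ? $sentence : $current . ' ' . $sentence;

        if (strlen($candidate) > $maxChars) {
            // The sentence does not fit: close the current chunk and start a new one.
            if ($current !== '') {
                $chunks[] = $current;
            }
            $current = $sentence;
        } else {
            $current = $candidate;
        }
    }

    if ($current !== '') {
        $chunks[] = $current;
    }

    return $chunks;
}
```

Splitting on sentence boundaries rather than at a fixed offset keeps each sentence intact, which matters for grammar checks. The trade-off is that issues spanning two sentences in different chunks can be missed.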

User Experience:

- Making error messages user-friendly

- Providing accurate progress indicators

- Handling edge cases gracefully

- Maintaining responsive design

Technical Decisions:

- Choosing SQLite over PostgreSQL (simplicity won)

- Deciding between DigitalOcean App Platform and a traditional VPS

- Selecting the right packages and tools

- Planning for future scaling

Lessons Learned

AI Integration: GPT-4 is incredibly powerful for natural language tasks, but proper prompt engineering is crucial for reliable results. The key was finding the right balance between accuracy and processing speed.

Performance: Web scraping and AI processing can be slow, so implementing proper loading states and user feedback was essential. I used Laravel's queues to handle background processing and Livewire's loading states for a smooth user experience.

Error Handling: Websites can be unpredictable, so robust error handling and graceful fallbacks are crucial. I implemented comprehensive try-catch blocks and user-friendly error messages throughout the application.

Rate Limiting: Managing API costs was crucial. I implemented tiered rate limiting to prevent abuse while ensuring paying customers get priority access.
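
To make the idea of tiered rate limiting concrete, here is a framework-free sketch of a fixed-window limiter whose ceiling depends on the caller's tier. The tier names, the limits, and the class `TieredRateLimiter` are hypothetical. In the real application this logic would more naturally sit on top of Laravel's `RateLimiter` with a shared cache rather than an in-memory array.

```php
<?php

declare(strict_types=1);

/**
 * Fixed-window rate limiter with a different ceiling per tier.
 * State is kept in memory, so this only illustrates the logic.
 */
final class TieredRateLimiter
{
    /** @var array<string, array{count: int, windowStart: int}> */
    private array $windows = [];

    /**
     * @param array<string, int> $limits Max requests per window, keyed by tier name
     */
    public function __construct(
        private array $limits,
        private int $windowSeconds = 60
    ) {
    }

    /**
     * Record an attempt and report whether it is allowed.
     * $now is passed in (Unix seconds) so the logic is easy to test.
     */
    public function attempt(string $key, string $tier, int $now): bool
    {
        $limit = $this->limits[$tier]
            ?? throw new InvalidArgumentException("Unknown tier: {$tier}");

        $window = $this->windows[$key] ?? ['count' => 0, 'windowStart' => $now];

        // Start a fresh window once the previous one has expired.
        if ($now - $window['windowStart'] >= $this->windowSeconds) {
            $window = ['count' => 0, 'windowStart' => $now];
        }

        if ($window['count'] >= $limit) {
            $this->windows[$key] = $window;
            return false;
        }

        $window['count']++;
        $this->windows[$key] = $window;

        return true;
    }
}
```

With limits such as `['free' => 2, 'paid' => 5]`, a free user's third request within a minute is rejected while a paying customer still has headroom, which is the priority behavior described above.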

Future Plans

I'm working on several enhancements:

- Custom dictionaries for brand names and industry terms

- Team collaboration features with shared workspaces

- API access for integration with content management systems

- Support for JavaScript-rendered content using headless browsers

- Integration with popular CMS platforms (WordPress, Shopify, etc.)

- Advanced reporting with trend analysis

- Custom rules and ignore patterns

Try It Out

Visit sitespellchecker.com to try it out. The basic single page scan is free, and you can upgrade to scan your entire site. Daily monitoring is coming soon.